We know the story of the AI-native telco by now – and how it underpins the need to break out of current operating constraints to be able to build new business models, and benefit from innovative technology in the network.

This week, many telcos were grappling with the issue at Ericsson’s OSS BSS Summit in London – and several of them identified a common requirement for how they get from where they are now, to where they want to be. The good news is there seems to be agreement on what they want. The less good news is that there’s a lot of work remaining to get there.

First, back to that orthodoxy. Here’s an expression of it from Mats Karlsson, VP and Head of Solution Area Operations and Business Support Systems at Ericsson.

“Autonomous networks are how we can deliver the new business paradigm. It’s not possible to deliver differentiated services at scale with a network that is not fully automated. If you want to have SLAs, you need a high degree of automation in the network,” he said.

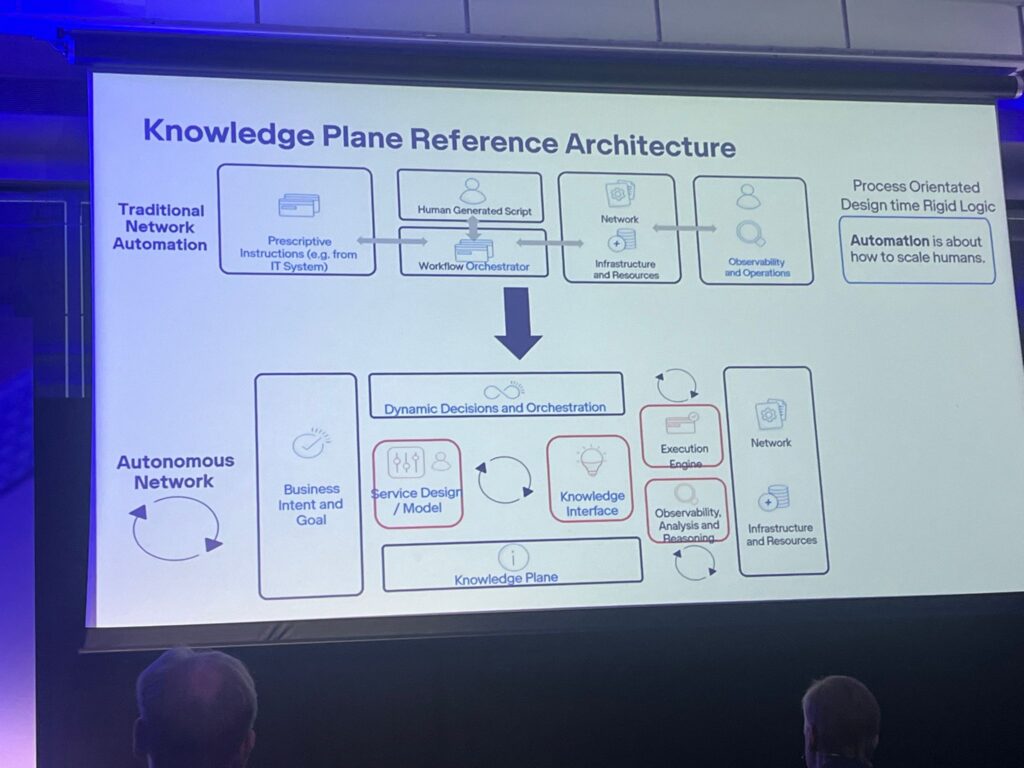

The autonomous networks vision means being able to define what we in the industry have learned to call the business intent and, with closed loop controls, automatically create the policies to orchestrate the resources required for that intent. Doing that directly in a live production network is pretty foolhardy, so this approach also requires modelling the changes you are about to make via a digital twin of your network. That in turn requires the digital twin to be an accurate, real time representation of the dependencies and relationships in your network. After all, what do you do if the resources required to deliver one “intent” clash with the resources required to stay within the SLA parameters you have set for another customer?
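To make that clash concrete, here is a minimal sketch of the kind of check a digital twin might run before any policy reaches production. It is an illustration only: the resource names, capacities, the `Intent` type and the `find_conflicts` function are all hypothetical and do not describe any vendor's or operator's product.

```python
from dataclasses import dataclass


@dataclass
class Intent:
    """A business intent reduced to the resources it would claim (hypothetical model)."""
    name: str
    demands: dict  # resource name -> units required


def find_conflicts(capacity, intents):
    """Return {resource: [intent names]} for resources whose combined demand
    exceeds the capacity modelled in the twin."""
    claims = {}
    for intent in intents:
        for resource, units in intent.demands.items():
            claims.setdefault(resource, []).append((intent.name, units))

    conflicts = {}
    for resource, resource_claims in claims.items():
        demanded = sum(units for _, units in resource_claims)
        if demanded > capacity.get(resource, 0):
            conflicts[resource] = [name for name, _ in resource_claims]
    return conflicts


# Assumed capacities held in the twin (illustrative numbers only).
twin_capacity = {"backhaul_link_A": 100, "edge_cpu_1": 16}

intents = [
    Intent("gold_sla_customer", {"backhaul_link_A": 70, "edge_cpu_1": 4}),
    Intent("new_video_service", {"backhaul_link_A": 50, "edge_cpu_1": 8}),
]

conflicts = find_conflicts(twin_capacity, intents)
print(conflicts)
```

In this toy case the two intents together ask for more of `backhaul_link_A` than the twin says exists, so the change would be flagged before deployment. A real system would also have to decide which intent wins, which is the harder problem the operators describe.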

As Ericsson’s Karlsson said, this requires both knowledge management – how you understand network data and translate it into actions – and observability, which gives you a real time view of the network.

The telco requirement: knowledge, topology – not just data

And it is this piece that is still exercising telcos. They know where they are: lots of data, diversely coded and structured, and/or residing in silos. And they know where they want to get to: being able to standardise and structure that data so that they can understand it in a way that gives them real time knowledge of the network.

It’s the bit in between that represents the nub of the matter. They need to explode the “magic happens here” bit of the architecture between data collection and automated action into a series of line items, tools and processes that can create that automated, closed loop, intelligent system.

Naturally, that could include large amounts of AI with agents that observe, detect, propose, evaluate and act. Gen AI technologies can provide a conversational interface for the humans that are involved in the process.

Ericsson outlined its agentic AI strategy earlier this year, and Karlsson said again, “This is not science fiction. It is something here and now, we need to prove the industrialisation potential of automated networks.”

But even if it’s not science fiction, telcos at the event identified the gap in the vision.

Orange’s end-to-end topology view

Houda Mansour, Head of automation for networks, Orange Group, said that by 2030 Orange’s aim is to have fully autonomous management with closed loop remediations, minimal field interventions, predictive maintenance and intent-based management – all delivered by a much leaner team.

This would cut diagnostic times for a fault such as a fibre cut from the current average of three and a half hours to near-immediate.

Although this is five years into the future, “This is not futuristic,” she said, reflecting Karlsson’s science fiction take. But she did outline the work Orange still has to do.

One key problem is data – and Orange, like all operators, has a lot of it: currently a million pieces of equipment with an individual profile, a petabyte of data generated daily, 2 million alarms a day and 1,000 billion CDRs generated every day.

Building a foundation for automation from that data will require “data democracy” – a unified view of data across disparate systems. The second foundation is “structurisation” of the data by digital twins to give enhanced visibility, proactive performance and management to be able to take strategic decisions.

A digital twin could model a business intent into network policies automatically, with closed loop self healing, remediation and verification. But digital twins need accurate, complete, real time data to create real time modelling across multiple domains. Houda said that Orange is exploring graph based solutions that provide an end to end view of the topology, representing nodes and dependencies.
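As a rough illustration of why a graph is a natural fit here, the sketch below stores a toy topology as "depends on" edges and walks it to find everything affected by a single failure. The element names and the `impacted_by` function are invented for the example; nothing here reflects Orange's actual data model or tooling.

```python
from collections import deque

# depends_on[x] lists the elements that x relies on directly (hypothetical topology).
depends_on = {
    "volte_service": ["ims_core", "ran_site_12"],
    "broadband_service": ["olt_7"],
    "ims_core": ["router_3"],
    "ran_site_12": ["fibre_span_42"],
    "olt_7": ["fibre_span_42"],
    "router_3": [],
    "fibre_span_42": [],
}


def impacted_by(failed, depends_on):
    """Return every node that depends, directly or indirectly, on `failed`."""
    # Invert the edges so we can walk from a failed element up to its dependants.
    dependants = {}
    for node, deps in depends_on.items():
        for dep in deps:
            dependants.setdefault(dep, set()).add(node)

    # Breadth-first traversal over the inverted graph.
    seen = set()
    queue = deque([failed])
    while queue:
        current = queue.popleft()
        for parent in dependants.get(current, ()):
            if parent not in seen:
                seen.add(parent)
                queue.append(parent)
    return seen


impacted = impacted_by("fibre_span_42", depends_on)
print(sorted(impacted))
```

Here a single fibre cut surfaces both the access elements and the two services riding on them. The traversal itself is simple; the hard part, as the operators note, is keeping the graph complete and current across domains.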

You say topology, I say ontology…

Telstra’s Mark Sanders, Chief Architect & Executive Autonomous Networks Enablement, asked himself the question, “What would it take for 2026 to be remembered as the year networks became truly intelligent?” and then delivered three answers.

First would be to solve the challenges of intent-based systems, namely how to manage conflicts and competing resource optimisation. Second, securing trust in AI, where there is “probably not” trust in letting AI run closed loop systems yet.

And third, and perhaps most interesting, is where he identified the need for a foundation for reasoning and inferencing. This requires being able to provide context and understanding across diverse systems and agents, which moves from modelling data to modelling knowledge. Sanders termed this capability the “Knowledge Plane”, which would be built by coding data so that it revealed its dependencies and relationships within the topology. This Knowledge Plane underpins an ontology that provides a unified context for automation.

Ontology, he said, would provide a structured, explainable Knowledge Plane that grounds agents, unifies domains and “converts autonomy into new customer value.”

If 2025 has been the year of Agentic AI, developing this Knowledge Plane would move 2026 to being the year of Ontology, Sanders said.

But Sanders is not 100% certain who builds this, or how, and he said that he is on the lookout for partners with similar views to help define and deliver this part of the puzzle. That could be within the sort of forums that TM Forum already offers.

DT – promising but no breakthroughs

Another operator looking for answers is Deutsche Telekom, whose Marcus Müller laid out a similar looking problem statement to both Sanders and Houda.

Müller, VP Lab & Automation, DT Germany, said that the company has up to 80 distinct service chains. For reference, something as complex as its entire VoLTE service represents one service chain. Each chain has a dedicated team responsible for running hardware, application software, management systems and the vendor-specific EMS within the chain.

That lies behind the operator’s decision to move to a horizontal digital architecture that is agnostic to the service applications, with a common OSS assurance and automation platform that forms a set of integrated and harmonised tools, managed by just one team.

However, while this architecture provides many benefits – for instance being able to roll out new software across 20 million customers in just minutes – it also introduces new problems of accountability and root cause detection. Instead of there being just one owner of a service chain, there could be 10-15 people involved in detection. It also means that a change in infrastructure could affect many more services than before, which makes it critical to gain an understanding of relationships and dependencies in the network.

To get around this, what DT wants is a Kafka based bus where all its information is available, taking and assessing and correlating relevant data from each service chain to see where the problem lies, and then to assess which services are dependent on any changes made to address the problem.
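The correlation step described here can be sketched in a few lines. This is a simplified illustration, not DT's design: the alarms are a hard-coded list standing in for records that would arrive over a message bus, and the chain names, element names and the `correlate` function are all hypothetical.

```python
from datetime import datetime, timedelta

# Which network elements each service chain uses (illustrative data only).
chain_elements = {
    "volte": {"ims_core", "router_3", "fibre_span_42"},
    "broadband": {"olt_7", "fibre_span_42"},
    "enterprise_vpn": {"pe_router_1", "fibre_span_42"},
    "iptv": {"cdn_node_2", "router_9"},
}

# (timestamp, alarming service chain); in practice these would be consumed from the bus.
alarms = [
    (datetime(2025, 1, 1, 10, 0, 5), "volte"),
    (datetime(2025, 1, 1, 10, 0, 20), "broadband"),
    (datetime(2025, 1, 1, 11, 30, 0), "iptv"),
]


def correlate(alarms, chain_elements, window=timedelta(seconds=60)):
    """Group alarms that fall within `window` of the earliest alarm, then return
    (suspect elements shared by all alarming chains, chains that use a suspect)."""
    ordered = sorted(alarms)
    if not ordered:
        return set(), set()

    start = ordered[0][0]
    alarming = {chain for ts, chain in ordered if ts - start <= window}

    # Elements common to every alarming chain are the likeliest shared cause.
    suspects = set.intersection(*(chain_elements[c] for c in alarming))

    # Any chain touching a suspect element is affected by a fix or change there.
    dependent = {c for c, elems in chain_elements.items() if elems & suspects}
    return suspects, dependent


suspects, dependent = correlate(alarms, chain_elements)
print(suspects, sorted(dependent))
```

The toy data points to a shared fibre span and also flags a chain that has not yet alarmed but depends on the same element. Doing this in real time, across dozens of chains and inconsistent data sources, is the part Müller says no vendor has yet delivered.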

So Müller needs a central “data lakehouse” to store all his data, and he wants a transparent system that provides full observability across the network.

Again, AI and ML technologies are the foundation. AI can help greatly with the hated task of documentation, with knowledge graphs to automate topology detection, and keep those permanently updated.

But for providing that live, relational view of the network, Müller says DT has not been able to find one system that has given it a breakthrough. It has trained some models with data dumps and received some promising feedback, but nothing groundbreaking, in his words.

“The important thing we learned is that none of the potential vendors can process data in real time across different sources. All confirmed the need for a central data lakehouse as the basis for observability and service chain assessment.

“Our idea was that somebody collects all the data in real time and tells us what happened. None have been able to do so, and everybody claimed we need a single source of truth because other ideas weren’t working.”

Müller agreed that this is a similar expression of requirement to Sanders’ plea for topology intelligence. And in fact it is also similar to Orange’s Houda’s requirement for an end to end view of network topology and dependencies.

A re-imagined OSS as a catalyst for innovation

So on a day when one partner speaker (a hyperscaler) said that what would be useful would be for telcos to agree and settle on some common requirements, there was indeed a common theme. It was just a big one: build observability, structure it into topological systems that give your automation engines an ontological live view and understanding of the network, and accordingly give you trust and confidence in those engines.

This is the target for the big vendors, and their partners. Telcos will lead and will want to maintain ownership and sovereignty of their data and systems, but they cannot build this by themselves. Observability, real time modelling and validation, a trusted context for AI agents, controlled by intuitive interfaces – these are the targets for the new OSS of the future. And if that sounds like orthodoxy and apple pie, then that’s also the sound of the industry trying to turn itself around, innovate and take advantage of innovation.

As Gabriela Styf Sjöman, Managing Director Research and Networks Strategy, BT Group, and host operator of the Summit, said in her closing comments:

“OSS/BSS is the central engine that creates and translates data into intelligent insights and the ability to act on them. But more important is the strong link between the re-imagined role of OSS-BSS and the ability to innovate on top of these networks, and as a catalyst to product innovation.”